These are my notes from week 6 of the Kubernetes Advanced Network Study run by Gasida of CloudNet.

3.7 Canary Upgrade

▶ Deployment automation support (minimal downtime or zero downtime): rolling update, canary update, blue/green update

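1. Rolling update

- A deployment strategy that gradually replaces the old version of an application with the new version until all of the infrastructure running the application has been replaced

- Replaces running instances with the new version one at a time

- Takes one running instance out of the load balancer's routing, upgrades it to the new version, and then routes traffic to it again

- Used when available resources are limited

2. Canary update

- A technique that reduces the risk of introducing a new software version into production by rolling the change out slowly, before releasing it across the entire infrastructure and making it available to every user

- A deployment approach aimed at catching potential problems early

- Only a subset of instances is switched to the new version and monitored; if no errors appear, the remaining instances are switched as well

3. Blue/green update

- The old version is called the blue environment and the new version the green environment; only one version is live at a time

- An identical set of servers is built in advance, and the new version is deployed by switching routing over instantly

- Rollback is fast, the production environment stays intact, and the new version can be tested before release, but twice the resources are needed, which makes it costly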
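The three strategies above can be compared side by side with a toy model. This is a minimal Python sketch under simplifying assumptions, not how Kubernetes or any deployment tool implements them: a fleet is just a list of version strings, the `is_healthy` callback stands in for whatever monitoring you use, and `rolling_update`, `canary_update`, and `BlueGreen` are hypothetical names.

```python
from typing import Callable, Dict, List


def rolling_update(fleet: List[str], new: str) -> List[List[str]]:
    """Upgrade one instance at a time and record the fleet after every step."""
    current = list(fleet)
    steps = []
    for i in range(len(current)):
        # Drain instance i from the load balancer, upgrade it, then route to it again.
        current[i] = new
        steps.append(list(current))
    return steps


def canary_update(fleet: List[str], new: str, canaries: int,
                  is_healthy: Callable[[List[str]], bool]) -> List[str]:
    """Upgrade a few instances first; finish the rollout only if monitoring is clean."""
    trial = [new] * canaries + fleet[canaries:]
    if not is_healthy(trial):
        return list(fleet)  # roll the canaries back to the old version
    return [new] * len(fleet)


class BlueGreen:
    """Two complete environments; the router points at exactly one of them."""

    def __init__(self, blue: List[str], green: List[str]) -> None:
        self.envs: Dict[str, List[str]] = {"blue": blue, "green": green}
        self.live = "blue"

    def switch(self) -> None:
        # Cutover (or rollback) is a single routing change.
        self.live = "green" if self.live == "blue" else "blue"

    def serving(self) -> List[str]:
        return self.envs[self.live]
```

For example, `rolling_update(["v1", "v1", "v1"], "v2")` shows the fleet moving from one upgraded instance to three, while `BlueGreen.switch()` moves all traffic at once and calling it again is the fast rollback.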

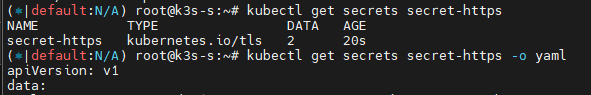

3.8 HTTPS Handling (TLS Termination)

[ Try it yourself ] - https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/

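4. Introduction to Gateway API

Key features of Gateway API

1. Improved resource model

: The API introduces new custom resources such as GatewayClass, Gateway, and Route (HTTPRoute, TCPRoute, etc.), providing a more granular and expressive way to define routing rules.

2. Protocol-agnostic

: Unlike Ingress, which was designed mainly for HTTP, Gateway API supports multiple protocols, including TCP, UDP, and TLS.

3. Enhanced security

: Built-in support for TLS configuration and finer-grained access control.

4. Cross-namespace support

: Traffic can be routed to Services in other namespaces, enabling more flexible architectures.

5. Extensibility

: The API is designed to be easily extended with custom resources and policies.

6. Role-oriented

: Concerns are cleanly separated among cluster operators, application developers, and security teams.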

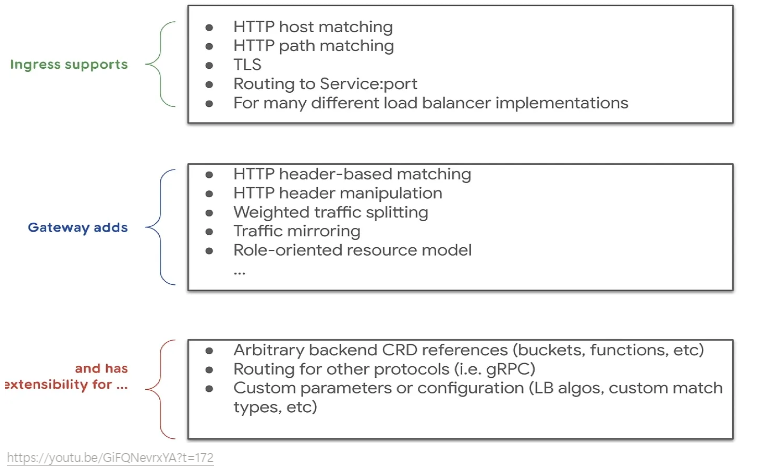

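Gateway API overview: adds more features on top of the existing Ingress and separates responsibilities by role (role-oriented)

- Adds some of the rich features offered by a service mesh (Istio), along with features needed for operations and management

- Additional features: header-based routing, header modification, traffic mirroring (easy traffic duplication), role-based design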

- Gateway API is an add-on family of API kinds that provide dynamic infrastructure provisioning and advanced traffic routing.

- Make network services available by using an extensible, role-oriented, protocol-aware configuration mechanism.

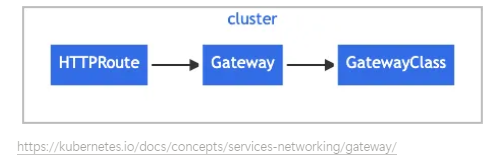

Components (resources)

- GatewayClass, Gateway, HTTPRoute, TCPRoute, Service

- GatewayClass : Defines a set of gateways with common configuration and managed by a controller that implements the class.

- Gateway : Defines an instance of traffic handling infrastructure, such as a cloud load balancer.

- HTTPRoute : Defines HTTP-specific rules for mapping traffic from a Gateway listener to a representation of backend network endpoints. These endpoints are often represented as a Service.

- Kubernetes Traffic management : Combining Gateway API with Service Mesh for North-South and East-West Use Cases

- Request flow

Why does a role-oriented API matter?

- Behavior and permissions can be granted flexibly according to each person's role

- For example, a 'store developer' can manage the routing policies for the store path within the Store namespace on their own

- Infrastructure Provider : Manages infrastructure that allows multiple isolated clusters to serve multiple tenants, e.g. a cloud provider.

- Cluster Operator : Manages clusters and is typically concerned with policies, network access, application permissions, etc.

- Application Developer : Manages an application running in a cluster and is typically concerned with application-level configuration and Service composition.
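To make the resource relationships and the role split concrete, here is a minimal Python sketch that models a GatewayClass, a Gateway owned by the cluster operator, and an HTTPRoute owned by the store developer as plain dicts shaped like their manifests, then follows the references from the route back to the controller. The names (`example-class`, `shared-gw`, `infra`, `store-svc`) and the `resolve` helper are hypothetical, and a real implementation also validates listeners, allowed namespaces, and other rules omitted here.

```python
from typing import Dict, List, Tuple

# Cluster-scoped class, typically managed by the infrastructure provider.
gateway_class = {
    "kind": "GatewayClass",
    "metadata": {"name": "example-class"},
    "spec": {"controllerName": "example.com/gateway-controller"},
}

# Shared Gateway, owned by the cluster operator in an "infra" namespace.
gateway = {
    "kind": "Gateway",
    "metadata": {"name": "shared-gw", "namespace": "infra"},
    "spec": {
        "gatewayClassName": "example-class",
        "listeners": [{"name": "http", "protocol": "HTTP", "port": 80}],
    },
}

# Route owned by the store developer in the "store" namespace.
store_route = {
    "kind": "HTTPRoute",
    "metadata": {"name": "store", "namespace": "store"},
    "spec": {
        "parentRefs": [{"name": "shared-gw", "namespace": "infra"}],
        "rules": [{"backendRefs": [{"name": "store-svc", "port": 8080}]}],
    },
}


def resolve(route: Dict, gateways: List[Dict],
            classes: List[Dict]) -> List[Tuple[str, str, List[str]]]:
    """Return (controllerName, Gateway name, backend Services) per parent Gateway."""
    route_ns = route["metadata"]["namespace"]
    backends = [ref["name"]
                for rule in route["spec"]["rules"]
                for ref in rule.get("backendRefs", [])]
    results = []
    for parent in route["spec"]["parentRefs"]:
        # A parentRef without a namespace refers to the route's own namespace.
        ns = parent.get("namespace", route_ns)
        gw = next((g for g in gateways
                   if g["metadata"]["name"] == parent["name"]
                   and g["metadata"]["namespace"] == ns), None)
        if gw is None:
            continue  # unresolved parent: the route is simply not attached
        cls = next((c for c in classes
                    if c["metadata"]["name"] == gw["spec"]["gatewayClassName"]), None)
        if cls is not None:
            results.append((cls["spec"]["controllerName"], gw["metadata"]["name"], backends))
    return results
```

The store developer only edits `store_route`; the Gateway and GatewayClass stay under the operator's and provider's control.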

5. Gloo Gateway

▶ Gloo Gateway Architecture : These components work together to translate Gloo and Kubernetes Gateway API custom resources into Envoy configuration

1. The config and secret watcher components in the gloo pod watch the cluster for new Kubernetes Gateway API and Gloo Gateway resources, such as Gateways, HTTPRoutes, or RouteOptions.

2. When the config or secret watcher detects new or updated resources, it sends the resource configuration to the Gloo Gateway translation engine.

3. The translation engine translates Kubernetes Gateway API and Gloo Gateway resources into Envoy configuration. All Envoy configuration is consolidated into an xDS snapshot.

4. The reporter receives a status report for every resource that is processed by the translator.

5. The reporter writes the resource status back to the etcd data store.

6. The xDS snapshot is provided to the Gloo Gateway xDS server component in the gloo pod.

7. Gateway proxies in the cluster pull the latest Envoy configuration from the Gloo Gateway xDS server.

8. Users send a request to the IP address or hostname that the gateway proxy is exposed on.

9. The gateway proxy uses the listener and route-specific configuration that was provided in the xDS snapshot to perform routing decisions and forward requests to destinations in the cluster.
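Steps 6 and 7 describe a publish-and-fetch loop around a versioned snapshot. The sketch below models only that loop in Python; the classes are hypothetical stand-ins, and real Gloo Gateway serves configuration to Envoy over the xDS gRPC protocol, which is not implemented here. Steps 1 to 5 are collapsed into a single `on_resource_change` function that also returns a per-resource status, loosely mirroring the reporter.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Snapshot:
    """A versioned bundle of Envoy configuration."""
    version: int
    resources: Dict[str, List[str]] = field(default_factory=dict)


class XdsServer:
    """Holds the latest snapshot produced by the translator."""

    def __init__(self) -> None:
        self.snapshot = Snapshot(version=0)

    def publish(self, resources: Dict[str, List[str]]) -> None:
        # Step 6: a new snapshot replaces the old one with a bumped version.
        self.snapshot = Snapshot(self.snapshot.version + 1, resources)


class GatewayProxy:
    """Stand-in for an Envoy gateway proxy."""

    def __init__(self) -> None:
        self.applied = Snapshot(version=0)

    def sync(self, server: XdsServer) -> bool:
        # Step 7: take the latest snapshot only when its version has changed.
        latest = server.snapshot
        if latest.version == self.applied.version:
            return False
        self.applied = latest
        return True


def on_resource_change(server: XdsServer, route_names: List[str]) -> Dict[str, str]:
    # Steps 1-5 collapsed: the watcher sees routes, the translator builds config,
    # and the reporter records a status for each processed resource.
    server.publish({"routes": list(route_names)})
    return {name: "Accepted" for name in route_names}
```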

Translation engine

1. The translation cycle starts by defining Envoy clusters from all configured Upstream and Kubernetes Service resources. Clusters in this context are groups of similar hosts. Each Upstream has a type that determines how the Upstream is processed. Correctly configured Upstreams and Kubernetes Services are converted into Envoy clusters that match their type, including information like cluster metadata.

2. The next step in the translation cycle is to process all the functions on each Upstream. Function-specific cluster metadata is added and is later processed by function-specific Envoy filters.

3. In the next step, all Envoy routes are generated. Routes are generated for each route rule that is defined on the HTTPRoute and RouteOption resources. When all of the routes are created, the translator processes any VirtualHostOption, ListenerOption, and HttpListenerOption resources, aggregates them into Envoy virtual hosts, and adds them to a new Envoy HTTP Connection Manager configuration.

4. Filter plug-ins are queried for their filter configurations, generating the list of HTTP and TCP filters that are added to the Envoy listeners.

5. Finally, an xDS snapshot is composed of all the valid endpoints (EDS), clusters (CDS), route configs (RDS), and listeners (LDS). The snapshot is sent to the Gloo Gateway xDS server. Gateway proxies in your cluster watch the xDS server for new config. When new config is detected, the config is pulled into the gateway proxy.
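The ordering of the translation cycle can be sketched as a single function. This Python sketch is a rough illustration under assumptions: upstreams, routes, and plug-in filter names are plain dicts and strings made up for the example, function processing (step 2) is skipped, and the output only mimics the shape of an xDS snapshot. Routes that point at an unknown cluster are dropped so the snapshot contains only valid resources.

```python
from typing import Dict, List


def translate_cycle(upstreams: List[Dict], routes: List[Dict],
                    plugin_filters: List[str]) -> Dict[str, List[Dict]]:
    """Toy translation cycle returning a snapshot-shaped dict (EDS/CDS/RDS/LDS)."""
    # Step 1: every configured upstream becomes an Envoy cluster of matching type.
    clusters = [{"name": u["name"], "type": u["type"]} for u in upstreams]
    known = {c["name"] for c in clusters}
    # Step 2 (function metadata on upstreams) is skipped in this sketch.

    # Step 3: one Envoy route per route rule, grouped into virtual hosts by hostname.
    vhosts: Dict[str, List[Dict]] = {}
    for r in routes:
        if r["backend"] not in known:
            continue  # invalid reference: keep it out of the snapshot
        for host in r["hostnames"]:
            vhosts.setdefault(host, []).append({"prefix": r["prefix"], "cluster": r["backend"]})
    route_config = {
        "name": "http",
        "virtual_hosts": [{"domains": [h], "routes": rs} for h, rs in vhosts.items()],
    }

    # Step 4: filter plug-ins contribute HTTP filters; Envoy's router filter goes last.
    listener = {"name": "http", "http_filters": plugin_filters + ["envoy.filters.http.router"]}

    # Step 5: bundle endpoints, clusters, route configs, and listeners.
    endpoints = [{"cluster": u["name"], "addresses": u.get("addresses", [])} for u in upstreams]
    return {"eds": endpoints, "cds": clusters, "rds": [route_config], "lds": [listener]}
```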

Deployment patterns

1. Simple ingress

2. Shared gateway

3. Sharded gateway with central ingress

- Depending on your existing setup, you may want to use a different type of proxy as the central ingress endpoint

- For example, you might have an HAProxy or AWS NLB/ALB instance that all traffic must pass through

4. API gateway for a service mesh

▶ [Tutorial] Hands-On with the Kubernetes Gateway API and Envoy Proxy

Kubernetes-hosted application accessible via a gateway configured with policies for routing, service discovery, timeouts, debugging, access logging, and observability

Install KinD Cluster

Install Gateway API CRDs : The Kubernetes Gateway API abstractions are expressed using Kubernetes CRDs.

Install Glooctl Utility : glooctl is a command-line utility that allows users to view, manage, and debug Gloo Gateway deployments

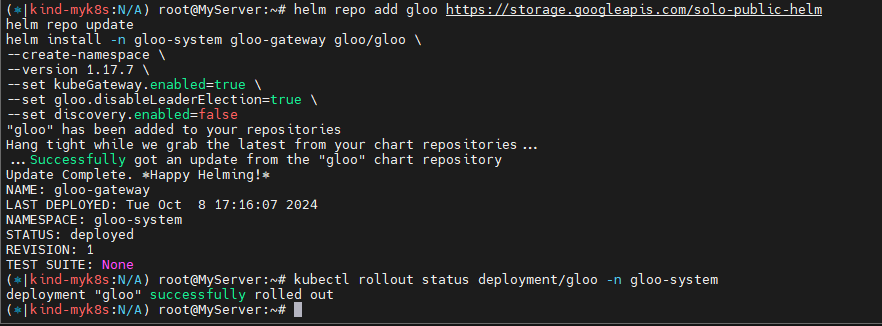

Install Gloo Gateway : open-source edition

Install Httpbin Application : A simple HTTP Request & Response Service


Control : Envoy data plane and the Gloo control plane.

- Now we'll configure a Gateway listener, establish external access to Gloo Gateway, and test the routing rules that are the core of the proxy configuration.

Configure a Gateway Listener

- Let's begin by establishing a Gateway resource that sets up an HTTP listener on port 8080 to expose routes from all our namespaces. Gateway custom resources like this are part of the Gateway API standard.
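A Gateway like the one described can be written out programmatically. This Python sketch builds the manifest as a dict and prints it as JSON, which `kubectl apply -f -` accepts on stdin. The name `http` and namespace `gloo-system` follow the `gloo-system/http` reference mentioned later; the `gatewayClassName` value `gloo-gateway` is an assumption about what the Gloo Gateway install provides, so check `kubectl get gatewayclass` in your cluster.

```python
import json

gateway = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "Gateway",
    "metadata": {"name": "http", "namespace": "gloo-system"},
    "spec": {
        # Assumption: the GatewayClass created by the Gloo Gateway install.
        "gatewayClassName": "gloo-gateway",
        "listeners": [
            {
                "name": "http",
                "protocol": "HTTP",
                "port": 8080,
                # Accept HTTPRoutes from every namespace, as the text describes.
                "allowedRoutes": {"namespaces": {"from": "All"}},
            }
        ],
    },
}

manifest = json.dumps(gateway, indent=2)
print(manifest)
```

Usage (file name hypothetical): `python gateway.py | kubectl apply -f -`.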

Establish External Access to Proxy

Configure Simple Routing with an HTTPRoute

Let's begin our routing configuration with the simplest possible route to expose the /get operation on httpbin.

HTTPRoute is one of the new Kubernetes CRDs introduced by the Gateway API. We'll start by introducing a simple HTTPRoute for our service.

HTTPRoute Spec

- ParentRefs - Define which Gateways this Route wants to be attached to (this is what connects the route to the Gateway).

- Hostnames (optional) - Define a list of hostnames to use for matching the Host header of HTTP requests.

- Rules - Define a list of rules to perform actions against matching HTTP requests.

- Each rule consists of matches, filters (optional), backendRefs (optional), and timeouts (optional) fields.

This example attaches to the default Gateway object created for us when we installed Gloo Gateway earlier.

See the gloo-system/http reference in the parentRefs stanza.

The Gateway object simply represents a host:port listener that the proxy will expose to accept ingress traffic.
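Putting the spec fields together, the simple route could look like the following Python sketch, again emitted as JSON for `kubectl apply -f -`. The `httpbin` name and namespace, the `api.example.com` hostname, and Service port `8000` are assumptions for illustration; only the `gloo-system/http` parent reference and the exact `/get` match come from the text.

```python
import json

httpbin_route = {
    "apiVersion": "gateway.networking.k8s.io/v1",
    "kind": "HTTPRoute",
    "metadata": {"name": "httpbin", "namespace": "httpbin"},  # assumed name/namespace
    "spec": {
        # Attach to the Gateway named "http" in the gloo-system namespace.
        "parentRefs": [{"name": "http", "namespace": "gloo-system"}],
        # Assumed hostname; only requests with this Host header match.
        "hostnames": ["api.example.com"],
        "rules": [
            {
                # Only the exact path /get is exposed.
                "matches": [{"path": {"type": "Exact", "value": "/get"}}],
                # Assumed Service name and port for httpbin.
                "backendRefs": [{"name": "httpbin", "port": 8000}],
            }
        ],
    },
}

print(json.dumps(httpbin_route, indent=2))
```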

Test the Simple Route with Curl

Note that if we attempt to invoke another valid endpoint, /delay, on the httpbin service, it will fail with a 404 Not Found error. Why? Because our HTTPRoute policy only exposes /get, one of the many endpoints available on the service, so a request for any other httpbin endpoint such as /delay has no matching route.

: The error comes from the Exact path match in the rule: the Exact match is set to /get, so a request to /delay fails.

: /delay cannot be reached through Envoy, but accessing httpbin directly through a NodePort succeeds.
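The 404 comes down to path-match semantics. This minimal Python sketch approximates Gateway API's `Exact` and `PathPrefix` matching (prefixes are compared on whole path segments, and a trailing slash on the prefix is ignored) and shows why a route with a single `Exact /get` rule rejects `/delay/1`. The function names are hypothetical, and real proxies also handle query strings and path normalization, which are ignored here.

```python
def path_matches(match_type: str, value: str, path: str) -> bool:
    """Approximate Gateway API path matching for the Exact and PathPrefix types."""
    if match_type == "Exact":
        return path == value
    if match_type == "PathPrefix":
        prefix = value.rstrip("/")  # a trailing slash on the prefix is ignored
        # Match whole segments: /api/httpbin matches /api/httpbin/get but not /api/httpbinx.
        return path == prefix or path.startswith(prefix + "/")
    raise ValueError(f"unsupported match type: {match_type}")


def status_for(path: str) -> int:
    """Status for a gateway whose only rule is an Exact match on /get."""
    return 200 if path_matches("Exact", "/get", path) else 404
```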

[ Regex pattern matching ] Explore Routing with Regex Matching Patterns

Let's assume that now we DO want to expose other httpbin endpoints like /delay. Our initial HTTPRoute is inadequate, because it is looking for an exact path match with /get.

We'll modify it in a couple of ways. First, we'll modify the matcher to look for path prefix matches instead of an exact match. Second, we'll add a new request filter to rewrite the matched /api/httpbin/ prefix with just a / prefix, which will give us the flexibility to access any endpoint available on the httpbin service. So a path like /api/httpbin/delay/1 will be sent to httpbin with the path /delay/1.

    # Here are the modifications we’ll apply to our HTTPRoute:
    - matches:
      # Switch from an Exact matcher to a PathPrefix matcher
      - path:
          type: PathPrefix
          value: /api/httpbin/
      filters:
      # Replace the matched /api/httpbin/ prefix with /
      - type: URLRewrite
        urlRewrite:
          path:
            type: ReplacePrefixMatch
            replacePrefixMatch: /
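The `ReplacePrefixMatch` rewrite can be approximated in a few lines. This Python sketch follows my reading of the Gateway API rules (the matched prefix, ignoring a trailing slash, is swapped for the replacement, and the resulting path is never empty); `rewrite` is a hypothetical helper, not Gloo or Envoy code.

```python
def rewrite(path: str, prefix: str, replacement: str) -> str:
    """Apply a ReplacePrefixMatch rewrite to a path that matched `prefix` (PathPrefix)."""
    matched = prefix.rstrip("/")
    if not (path == matched or path.startswith(matched + "/")):
        raise ValueError(f"{path!r} does not match prefix {prefix!r}")
    remainder = path[len(matched):]  # "", "/", or "/rest/of/path"
    new_path = replacement.rstrip("/") + remainder
    return new_path or "/"  # never forward an empty path
```

With the configuration above, `rewrite("/api/httpbin/delay/1", "/api/httpbin/", "/")` yields `/delay/1`, which is the path httpbin actually receives.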

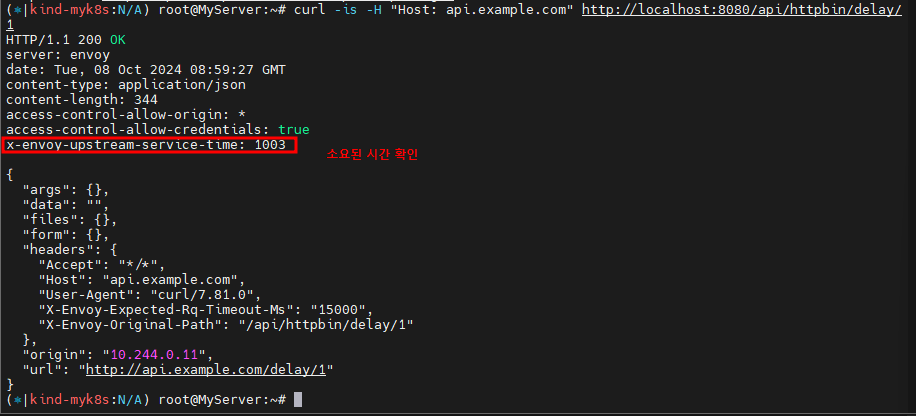

Test Routing with Regex Matching Patterns

When we used only a single route with an exact match pattern, we could only exercise the httpbin /get endpoint. Let's now use curl to confirm that both /get and /delay work as expected.

Perfect! It works just as expected! Note that the /delay operation completed successfully and that the 1-second delay was applied. The response header x-envoy-upstream-service-time: 1023 indicates that Envoy reported that the upstream httpbin service required just over 1 second (1,023 milliseconds) to process the request. In the initial /get operation, which doesn't inject an artificial delay, observe that the same header reported only 14 milliseconds of upstream processing time.

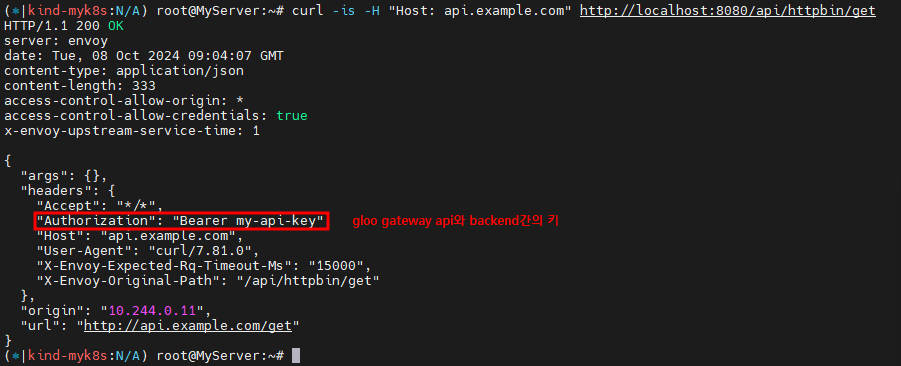

[ Transformations with upstream bearer tokens ] Test Transformations with Upstream Bearer Tokens

Goal: What if we have a requirement to authenticate with one of the backend systems to which we route our requests?

Let's assume that this upstream system requires an API key for authorization, and that we don't want to expose this key directly to the consuming client. In other words, we'd like to configure a simple bearer token to be injected into the request at the proxy layer (that is, the proxy injects a static API key token directly).

We can express this in the Gateway API by adding a filter that applies a simple transformation to the incoming request.

This will be applied along with the URLRewrite filter we created in the previous step.

    # The new filters stanza in our HTTPRoute now looks like this:
    filters:
    - type: URLRewrite
      urlRewrite:
        path:
          type: ReplacePrefixMatch
          replacePrefixMatch: /
    # Add a Bearer token to supply a static API key when routing to backend system
    - type: RequestHeaderModifier
      requestHeaderModifier:
        add:
        - name: Authorization
          value: Bearer my-api-key
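The `add` list in the filter above behaves like a small function over the request headers. This Python sketch, with a hypothetical `add_request_headers` helper, lowercases header names because HTTP header names are case-insensitive, and appends to an existing value rather than replacing it, which is how the Gateway API describes `add`; the same filter also offers `set`, which overwrites instead.

```python
from typing import Dict, List


def add_request_headers(headers: Dict[str, str], add: List[Dict[str, str]]) -> Dict[str, str]:
    """Apply a RequestHeaderModifier `add` list to a request's headers."""
    out = {name.lower(): value for name, value in headers.items()}
    for header in add:
        key = header["name"].lower()  # HTTP header names are case-insensitive
        # `add` appends to an existing value instead of replacing it.
        out[key] = f"{out[key]},{header['value']}" if key in out else header["value"]
    return out
```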

[Migrate]

In this section, we'll explore how a couple of common service migration techniques, dark launches with header-based routing and canary releases with percentage-based routing, are supported by the Gateway API standard.

Configure Two Workloads for Migration Routing

Let's first establish two versions of a workload to facilitate our migration example. We'll use the open-source Fake Service to enable this.

- Fake Service: a service that can handle both HTTP and gRPC traffic, for testing upstream service communications, service meshes, and other scenarios.

Let's establish a v1 of our my-workload service that's configured to return a response string containing "v1". We'll create a corresponding my-workload-v2 service as well.

Test Simple V1 Routing

Before we dive into routing to multiple services, we'll start by building a simple HTTPRoute that sends HTTP requests to host api.example.com whose paths begin with /api/my-workload to the v1 workload.

Simulate a v2 Dark Launch With Header-Based Routing

Dark Launch is a great cloud migration technique that releases new features to a select subset of users to gather feedback and experiment with improvements before potentially disrupting a larger user community.

We will simulate a dark launch in our example by installing the new cloud version of our service in our Kubernetes cluster, and then using declarative policy to route only requests containing a particular header to the new v2 instance. The vast majority of users will continue to use the original v1 of the service just as before.

    rules:
    - matches:
      - path:
          type: PathPrefix
          value: /api/my-workload
        # Add a matcher to route requests with a v2 version header to v2
        headers:
        - name: version
          value: v2
      backendRefs:
      - name: my-workload-v2
        namespace: my-workload
        port: 8080
    - matches:
      # Route requests without the version header to v1 as before
      - path:
          type: PathPrefix
          value: /api/my-workload
      backendRefs:
      - name: my-workload-v1
        namespace: my-workload
        port: 8080

Configure two separate routes: one for v1, which the majority of service consumers will still use, and another for v2, which is reached by sending a request header with name version and value v2. Then apply the modified HTTPRoute.

: The value of the version header must exactly match v2.
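The rule selection above can be modeled as follows. This Python sketch is a simplified, hypothetical matcher: among rules whose path prefix matches, it prefers the longer prefix and then the rule with more header matches, which approximates the Gateway API precedence rules closely enough to show why a request carrying `version: v2` lands on v2 regardless of rule order while everything else falls through to v1.

```python
from typing import Dict, List, Optional

RULES = [
    {"prefix": "/api/my-workload", "headers": {"version": "v2"}, "backend": "my-workload-v2"},
    {"prefix": "/api/my-workload", "headers": {}, "backend": "my-workload-v1"},
]


def pick_backend(path: str, headers: Dict[str, str],
                 rules: List[Dict] = RULES) -> Optional[str]:
    """Return the backend for a request, or None when no rule matches (a 404)."""
    sent = {name.lower(): value for name, value in headers.items()}
    candidates = []
    for rule in rules:
        prefix = rule["prefix"].rstrip("/")
        if not (path == prefix or path.startswith(prefix + "/")):
            continue
        # Header names are case-insensitive; Exact header values are case-sensitive.
        if all(sent.get(name.lower()) == value for name, value in rule["headers"].items()):
            candidates.append(rule)
    if not candidates:
        return None
    # Longer prefix wins first, then the rule with more header matches.
    best = max(candidates, key=lambda r: (len(r["prefix"].rstrip("/")), len(r["headers"])))
    return best["backend"]
```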

Expand V2 Testing with Percentage-Based Routing

After a successful dark launch, we may want a period where we use a blue-green strategy of gradually shifting user traffic from the old version to the new one. Let's explore this with a routing policy that splits our traffic evenly, sending half our traffic to v1 and the other half to v2.

We will modify our HTTPRoute to accomplish this by removing the header-based routing rule that drove our dark launch. Then we will replace that with a 50-50 weight applied to each of the routes, as shown below:

    rules:
    - matches:
      - path:
          type: PathPrefix
          value: /api/my-workload
      # Configure a 50-50 traffic split across v1 and v2
      backendRefs:
      - name: my-workload-v1
        namespace: my-workload
        port: 8080
        weight: 50
      - name: my-workload-v2
        namespace: my-workload
        port: 8080
        weight: 50
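Weighted backendRefs send each request to a backend with probability proportional to its weight. This Python sketch simulates that with `random.choices`; it is a statistical model under that assumption, not Envoy's actual load-balancing code, and the helper names are hypothetical.

```python
import random
from collections import Counter
from typing import List, Tuple


def pick_weighted(backends: List[Tuple[str, int]], rng: random.Random) -> str:
    """Choose a backend with probability weight / sum(weights)."""
    names = [name for name, _ in backends]
    weights = [weight for _, weight in backends]
    if sum(weights) == 0:
        raise ValueError("all weights are zero; no backend can receive traffic")
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(backends: List[Tuple[str, int]], requests: int, seed: int = 0) -> Counter:
    """Count how many of `requests` simulated requests each backend receives."""
    rng = random.Random(seed)
    return Counter(pick_weighted(backends, rng) for _ in range(requests))
```

Shifting the weights step by step (for example 90/10, then 50/50, then 0/100) is one way to carry out the gradual, canary-style shift described in section 3.7.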

[Debug]

Solve a Problem with Glooctl CLI

A common source of Gloo configuration errors is mistyping an upstream reference, perhaps when copy/pasting it from another source but "missing a spot" when changing the name of the backend service target. In this example, we'll simulate making an error like that and then demonstrate how glooctl can be used to detect it.

First, let's apply a change that simulates mistyping an upstream config, so that it targets a non-existent my-bad-workload-v2 backend service rather than the correct my-workload-v2.

When we test this out, note that the 50-50 traffic split is still in place. This means that about half of the requests will be routed to my-workload-v1 and succeed, while the others will attempt to use the non-existent my-bad-workload-v2 and fail.

So we'll deploy one of the first weapons from the Gloo debugging arsenal, the glooctl check utility. It verifies a number of Gloo resources, confirming that they are configured correctly and are interconnected with other resources correctly. For example, in this case, glooctl will detect the error in the mis-connection between the HTTPRoute and its backend target.
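The kind of check described here can be sketched in Python: walk every HTTPRoute's `backendRefs` and report any that do not resolve to an existing Service. This is a hypothetical stand-in to illustrate the idea, not glooctl's implementation, and the message format is made up.

```python
from typing import Dict, List, Set


def check_backend_refs(routes: List[Dict], services: Set[str]) -> List[str]:
    """Report HTTPRoute backendRefs whose namespace/name is not an existing Service."""
    problems = []
    for route in routes:
        route_ns = route["metadata"]["namespace"]
        route_id = f"{route_ns}/{route['metadata']['name']}"
        for rule in route["spec"].get("rules", []):
            for ref in rule.get("backendRefs", []):
                # A backendRef without a namespace refers to the route's namespace.
                target = f"{ref.get('namespace', route_ns)}/{ref['name']}"
                if target not in services:
                    problems.append(f"HTTPRoute {route_id}: backend {target} not found")
    return problems


# Hypothetical route reproducing the typo from the text.
bad_route = {
    "metadata": {"name": "my-workload", "namespace": "my-workload"},
    "spec": {"rules": [{"backendRefs": [
        {"name": "my-workload-v1", "namespace": "my-workload", "port": 8080, "weight": 50},
        {"name": "my-bad-workload-v2", "namespace": "my-workload", "port": 8080, "weight": 50},
    ]}]},
}
```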

[Observe]

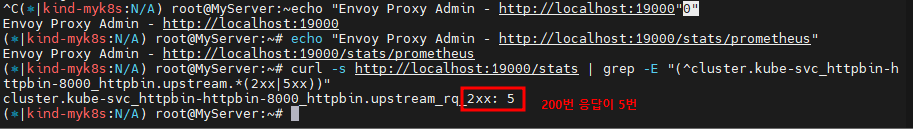

Explore Envoy Metrics

Envoy publishes a host of metrics that may be useful for observing system behavior. In our very modest kind cluster for this exercise, you can count over 3,000 individual metrics! You can learn more about them in the Envoy documentation.

For this 30-minute exercise, let's take a quick look at a couple of the useful metrics that Envoy produces for every one of our backend targets.

First, we'll port-forward the Envoy administrative port 19000 to our local workstation.

For this exercise, let's view two of the relevant metrics from the first part of this exercise: one that counts the number of successful (HTTP 2xx) requests processed by our httpbin backend (or cluster, in Envoy terminology), and another that counts the number of requests returning server errors (HTTP 5xx) from that same backend.
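Once the admin port is forwarded, the counters can be read from Envoy's `/stats` endpoint, which prints one `name: value` line per stat. This Python sketch fetches that text and sums the `upstream_rq_2xx` and `upstream_rq_5xx` counters for clusters whose name contains a given substring. The substring filter is an assumption, because Gloo's generated cluster names vary; the parser splits on the last `": "` so names containing colons still parse, and lines that are not integer counters (such as histograms) are skipped.

```python
from typing import Dict
from urllib.request import urlopen


def fetch_stats(admin_url: str = "http://localhost:19000") -> str:
    """Read Envoy's plain-text /stats output through the port-forwarded admin port."""
    with urlopen(f"{admin_url}/stats") as resp:
        return resp.read().decode()


def parse_stats(text: str) -> Dict[str, int]:
    """Turn 'name: value' lines into a dict, skipping non-integer stats."""
    stats = {}
    for line in text.splitlines():
        # Split on the last ": " so cluster names containing ':' stay intact.
        name, sep, value = line.strip().rpartition(": ")
        if sep and value.isdigit():
            stats[name] = int(value)
    return stats


def rq_by_class(stats: Dict[str, int], cluster_substring: str) -> Dict[str, int]:
    """Sum upstream_rq_2xx and upstream_rq_5xx for clusters matching a substring."""
    totals = {"2xx": 0, "5xx": 0}
    for name, value in stats.items():
        if not name.startswith("cluster.") or cluster_substring not in name:
            continue
        for status_class in totals:
            if name.endswith(f".upstream_rq_{status_class}"):
                totals[status_class] += value
    return totals
```

Usage: `rq_by_class(parse_stats(fetch_stats()), "httpbin")`.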